Tutorial

INTERACT 2023

19th International Conference of Technical Committee 13 (Human-Computer Interaction) of IFIP (International Federation for Information Processing).

August 31st, 2023 - York, United Kingdom

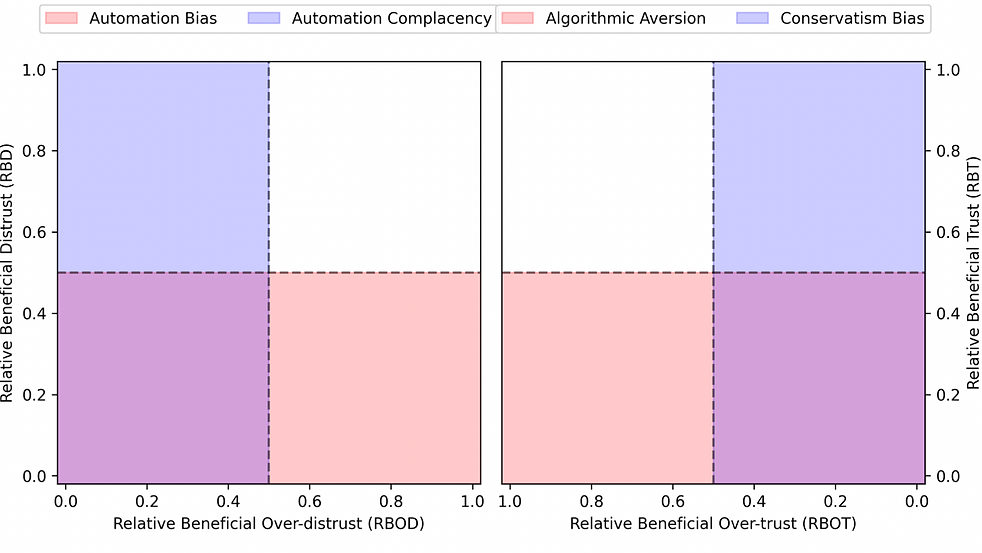

Figure 1. An empty Reliance Pattern Diagram

In this course, we will focus on the fit between human decision makers and AI support in classification tasks, in order to assess the extent to which humans rely on machines and the short-term effects of this relationship on decision efficacy and confidence (purposely neglecting long-term effects, such as complacency [1] and deskilling [2]). In particular, we will introduce and illustrate a general methodological framework that we recently proposed in [3] to gauge “technology dominance” [4]: the dominating influence that technology may have over the user, which allows the user to take a more subservient position and, in essence, to defer to the technology in the decision-making process.

Technology dominance is "the dominating influence that technology may have over the user, which allows the user to take a more subservient position — in essence, the user deferring to the technology in the decision-making process."

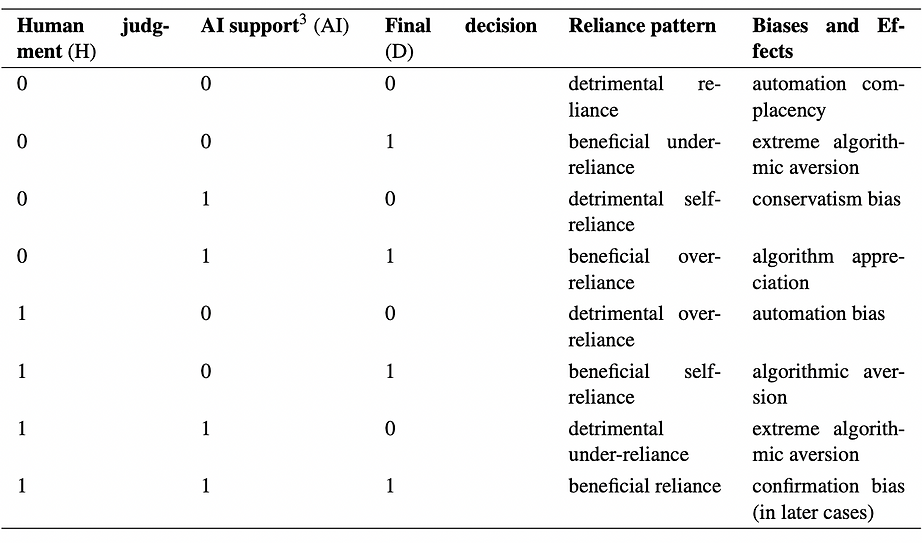

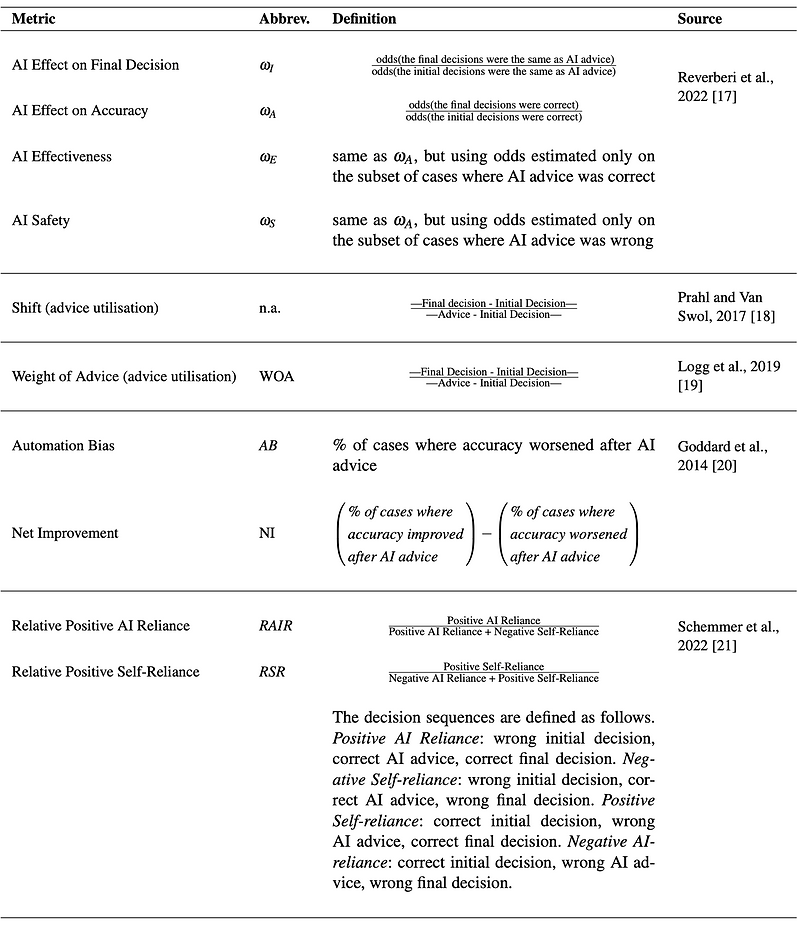

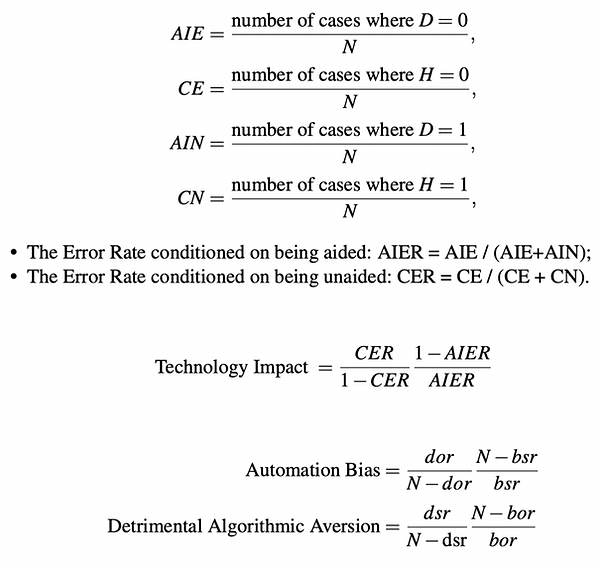

To this aim, we will present the framework of the reliance patterns (see Table 1 and Figure 2) and discuss the rationales behind it. The framework makes it possible to distinguish between positive and negative dominance, and the related biases, such as automation bias, algorithm appreciation, and a phenomenon that deserves more attention: what we defined as the white box paradox (in short, whenever automation bias is influenced by the provision of explanations [5]). We will then illustrate a set of methods for the qualitative and quantitative assessment of technology dominance, which encompass both metrics and data visualizations (see Figures 1 and 2); we will also distinguish between a standard statistical approach and a causal analysis-based approach that can be applied when additional information is available about the context of interest. Finally, we will apply these methods in case studies from a variety of settings, and provide the open source software and tools that we developed for this purpose, so that the community of interested scholars and researchers can adopt them. A final roundtable and wrap-up discussion among the participants and organizers will conclude the tutorial, addressing what it means to assess human reliance on AI both qualitatively and quantitatively, and future research within the INTERACT community.

Table 1. Definition of all possible decision and reliance patterns between human decision makers and their AI system. In the first three columns, 0 denotes an incorrect decision and 1 a correct decision. We associate each possible decision pattern with the attitude towards the AI, which leads to either accepting or discarding the AI advice, and with the main related cognitive biases.
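As a rough illustration of how such decision patterns can be enumerated, the following Python sketch lists the eight combinations of three correctness flags. The column names (`hd` for the initial human decision, `ai` for the AI advice, `fhd` for the final human decision), the helper `describe_pattern`, and the labels it returns are assumptions made here for illustration; they are not the names or bias labels used in Table 1.

```python
from itertools import product


def describe_pattern(hd: int, ai: int, fhd: int) -> dict:
    """Describe one decision pattern; 1 = correct, 0 = incorrect.

    hd  = initial human decision (assumed column)
    ai  = AI advice (assumed column)
    fhd = final human decision (assumed column)
    """
    # Assumption: in a binary task, equal correctness implies the same label,
    # so the final decision follows the advice exactly when fhd == ai.
    advice = "accepted" if fhd == ai else "discarded"
    # Compare final and initial correctness to see whether the interaction
    # improved, worsened, or left unchanged the human decision.
    if fhd > hd:
        effect = "beneficial"
    elif fhd < hd:
        effect = "detrimental"
    else:
        effect = "neutral"
    return {"pattern": (hd, ai, fhd), "advice": advice, "effect": effect}


# All 2^3 = 8 possible patterns, in lexicographic order.
patterns = [describe_pattern(*p) for p in product((0, 1), repeat=3)]

for row in patterns:
    print(row)
```

Under these assumptions, the pattern `(1, 0, 0)` (a correct initial decision overturned after incorrect advice) is the one typically associated with automation bias.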

We will then illustrate a set of methods for the quantitative and qualitative assessment of technology dominance, which encompass both metrics and data visualizations (see the tables and figures below).

Table 2. Literature review of metrics useful for the assessment of technological dominance

Figure 2. The metrics developed by MUDI Lab for the assessment of Human-AI reliance

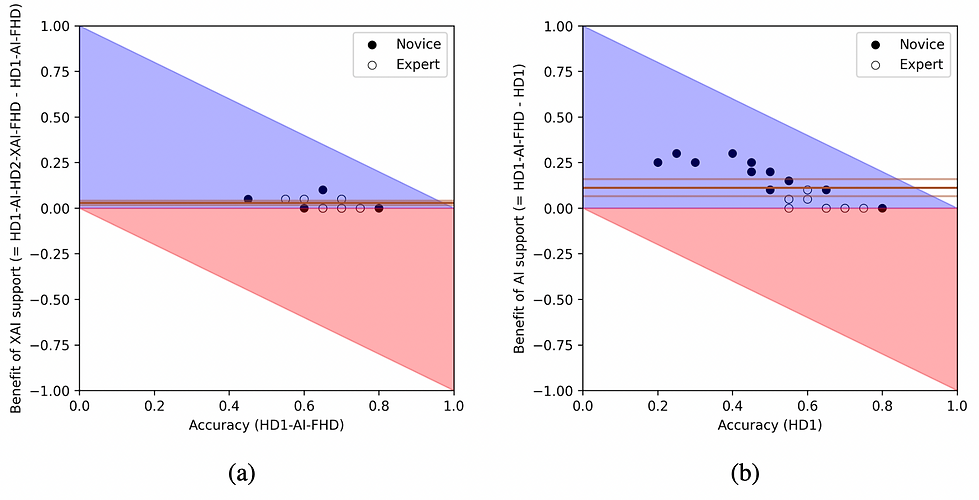

Figure 3. Example of Benefit Diagrams to visually evaluate the benefit coming from relying on AI (a) and XAI support (b), respectively.

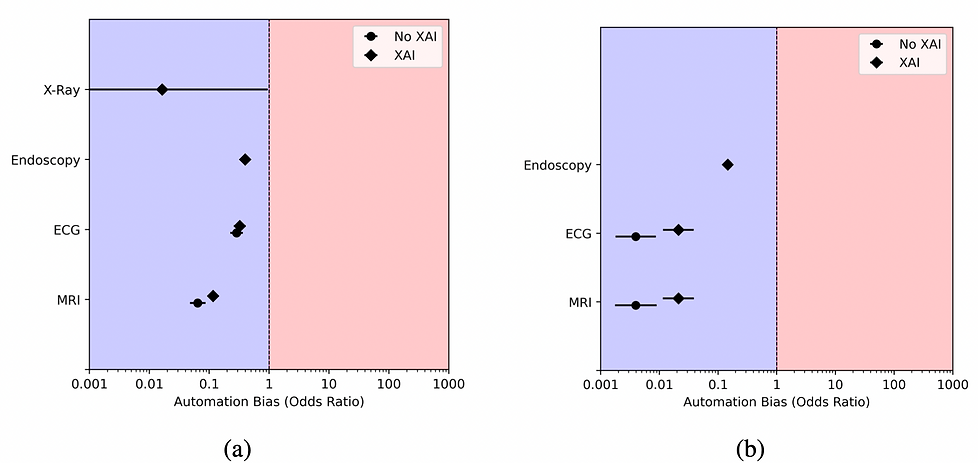

Figure 4. An example of Automation Bias odds ratios, for the 4 considered case studies: on the left, (a) the frequentist metric; on the right, (b) the causal metric.
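To give a feel for what a frequentist odds ratio of this kind might look like, here is a minimal Python sketch. It is not necessarily the metric plotted in Figure 4: the function name `automation_bias_odds_ratio`, the `(hd, ai, fhd)` record layout, and the choice of contrast (odds of a correct-to-incorrect switch after incorrect advice versus after correct advice) are all assumptions made here. The causal variant is not covered.

```python
def automation_bias_odds_ratio(records):
    """Odds ratio of switching from a correct to an incorrect decision.

    records: iterable of (hd, ai, fhd) correctness triples, 1 = correct,
    0 = incorrect (hypothetical layout: initial human decision, AI advice,
    final human decision).
    """
    a = b = c = d = 0
    for hd, ai, fhd in records:
        if hd != 1:
            continue  # only initially correct decisions can be overturned
        if ai == 0:
            if fhd == 0:
                a += 1  # wrong advice, human switched to an error
            else:
                b += 1  # wrong advice, human stayed correct
        else:
            if fhd == 0:
                c += 1  # correct advice, human still switched to an error
            else:
                d += 1  # correct advice, human stayed correct
    # Haldane-Anscombe correction: add 0.5 to every cell so that empty
    # cells do not cause a division by zero.
    a, b, c, d = (x + 0.5 for x in (a, b, c, d))
    return (a / b) / (c / d)


# Toy data, invented purely for illustration.
toy = [(1, 0, 0)] * 3 + [(1, 0, 1)] + [(1, 1, 0)] + [(1, 1, 1)] * 3
odds_ratio = automation_bias_odds_ratio(toy)
print(round(odds_ratio, 2))
```

With this definition, a value above 1 would suggest that incorrect advice makes it more likely for an initially correct human decision to be overturned.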

Duration: 1h 30m

Intended audience: Both scholars and practitioners at all levels of expertise can benefit from this course.

References